How to Choose the Best Cloud Server Plan in 2

Finding the right cloud server in 2025 can be challengi...

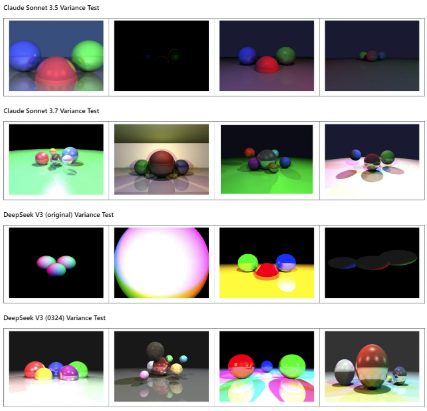

In a recent benchmark test, DeepSeek V3-0324 has shown significant improvement, now matching Sonnet 3.7 in terms of code creativity and complexity. The test involved asking various language models (LLMs) to write a raytracer in Python, capable of rendering a visually interesting scene with many colorful light sources. The goal was to evaluate how well the models could generate not only correct code but also produce aesthetically pleasing and varied outputs.

The prompt given to the models was simple but challenging: “Write a raytracer that renders an interesting scene with many colorful light sources in Python. Output an 800x600 image as a PNG.” The key challenge here was to create a scene that was visually captivating and complex, rather than just a basic example of a red, green, and blue sphere, which most models default to when generating basic raytracing code.

What stood out in the results was that while many models generated relatively simple scenes, often with poorly aligned spheres, Sonnet 3.5 and Sonnet 3.7 produced more complex and creative results. These versions of Sonnet were able to generate richer, more aesthetically varied scenes, often with improved color handling and visual complexity. This was especially noticeable in the larger file sizes of the outputs, indicating a more detailed rendering process. The creative leap demonstrated by Sonnet 3.7 suggested that Anthropic had found a way to boost the model's ability to generate more creative code and produce aesthetically pleasing outputs.

However, in the latest test with DeepSeek V3-0324, it was clear that this version had caught up to Sonnet 3.7. DeepSeek V3-0324 exhibited similar levels of creativity and visual quality in its code generation, indicating a significant improvement over its previous versions. In fact, the results suggest that DeepSeek is now on par with, if not exceeding, the previous benchmark set by Sonnet 3.7 in this specific task.

For a deeper dive into the benchmark data and further insights, you can check out the original post on Reddit here, which includes additional test results and more details.

Additionally, for those interested in the visual performance of the models, the benchmark data can be viewed through this link.

This progress highlights how language models are evolving not only in their ability to write functional code but also in generating more visually compelling and creative results. As AI continues to improve, we can expect even more sophisticated and aesthetically driven outputs in future benchmarks.

Q1: What is DeepSeek V3-0324 and how does it compare to Sonnet 3.7?

A1: DeepSeek V3-0324 is the latest version of the DeepSeek language model, which has shown significant improvements in its ability to generate creative code. In a recent benchmark test, it has matched or even surpassed Sonnet 3.7 in terms of code creativity, especially in generating visually interesting and complex raytracing scenes in Python. This marks a big leap over its previous versions, showcasing the model’s enhanced capability in producing both functional and aesthetically pleasing outputs.

Q2: What does the benchmark test entail?

A2: The benchmark test involves prompting language models to write a raytracer in Python that renders an interesting scene with multiple colorful light sources. The models are asked to output an 800x600 PNG image, and the test evaluates their creativity in generating visually compelling scenes, not just simple code. The focus is on how well the models create complex and varied visual elements rather than defaulting to simple designs.

Q3: Why is code creativity important in AI models like DeepSeek and Sonnet?

A3: Code creativity is a vital measure of an AI model’s ability to produce innovative and functional code that goes beyond standard solutions. In tasks like writing a raytracer, creativity can lead to more visually captivating and complex outputs. For developers and businesses relying on AI for code generation, this increased creativity means models can handle more diverse and sophisticated tasks, providing better results and improving overall user experience.

Q4: Where can I find more information and test results about DeepSeek V3-0324 and Sonnet 3.7?

A4: More information about the DeepSeek V3-0324 and Sonnet 3.7 benchmark results can be found in the original Reddit post here. For additional visual data and performance metrics, you can check out the benchmark image here.

The content of the original Reddit post is as follows:

A while ago I set up a code creativity benchmark by asking various LLMs a very simple prompt:

> Write a raytracer that renders an interesting scene with many colourful lightsources in python. Output a 800x600 image as a png

I only allowed one shot, no iterative prompting to solve broken code. What is interesting is that most LLMs generated code that created a very simple scene with a red, green and blue sphere, often also not aligned properly. Assumingly, the simple RGB example is something that is often represented in pretraining data.

Yet, somehow Sonnet 3.5 and especially Sonnet 3.7 created programs that generated more complex and varied scenes, using nicer colors. At the same time the filesize also increased. Anthropic had found some way to get the model to increase the creativity in coding and create more asthetic outcomes - no idea how to measure this other than looking at the images. (Speculation about how they did it and more ideas how to measure this are welcome in the comments)

Today I tested DeepSeek V3 0324 and it has definitely caught up to 3.7, a huge improvement over V3!

Benchmark data and more information here

Finding the right cloud server in 2025 can be challengi...

High-Performance Computing (HPC) in the cloud has becom...

E-commerce websites are not like personal blogs. They a...