If you’re weighing where to host Web3 nodes, you’ll quickly find that privacy/KYC, payment rails, and acceptable-use policies matter as much as performance. Here’s the short version:

If your overseas ops team needs a reliable way to run marketplace dashboards, ad consoles, or customer support tools from anywhere, a well‑configured Windows RDP VPS is a practical choice. This step‑by‑step guide shows you how to provision, secure, and

The dominance of Amazon Web Services (AWS) is undeniable, but for many users, the complexity of its billing and the high cost of Windows instances are becoming significant pain points. As cloud maturity grows, many tech leaders are looking for

In the rapidly evolving digital landscape, having a reliable, high-performance virtual environment is no longer a luxury—it is a necessity. For many businesses and developers, Windows remains the operating system of choice due to its compatibility with enterprise software, .NET

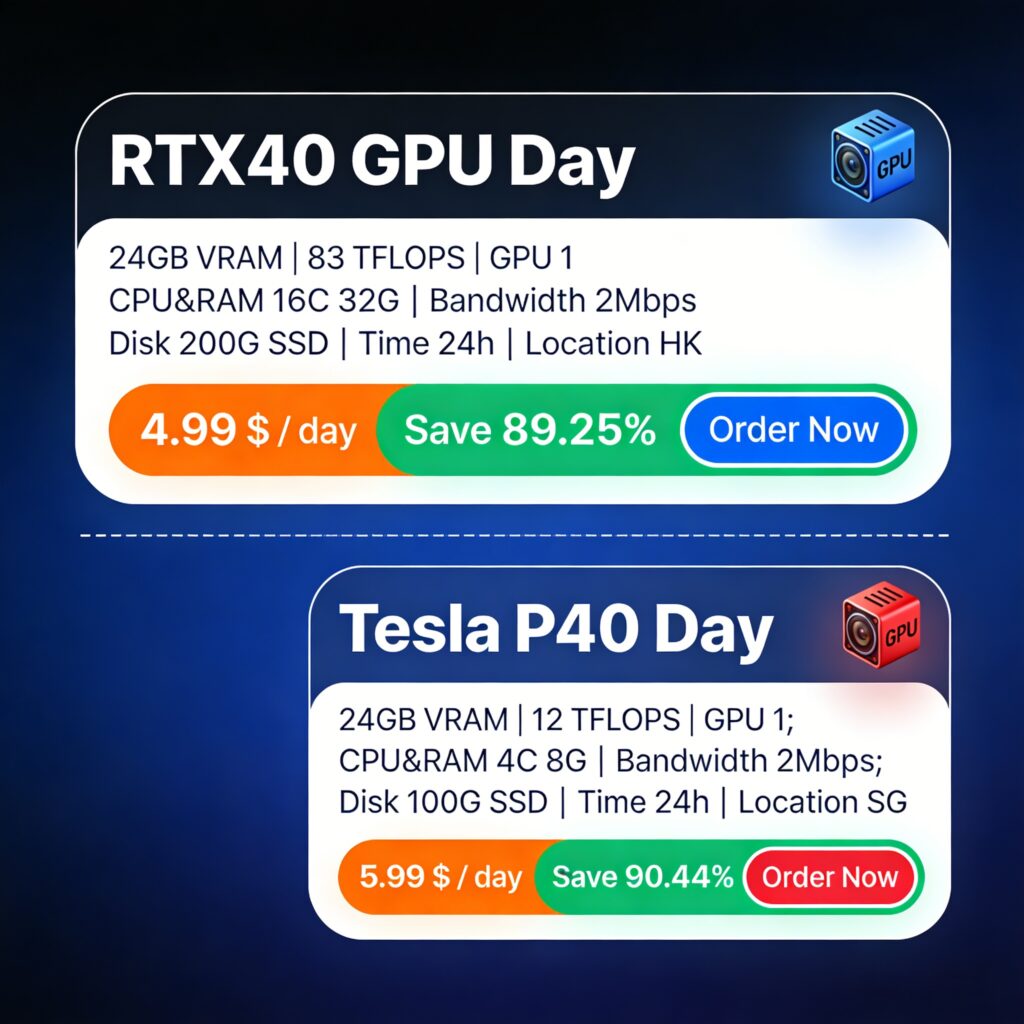

Predictable GPU costs make or break early AI projects. If you can lock in monthly rates and renew at the same price, you can plan experiments, launch pilots, and scale production without budget whiplash. On select monthly plans, the current

Understand what a Windows VPS is, how it works, and when to use it. Learn how SurferCloud offers Windows VPS with a free Windows system, crypto payments, and no forced KYC.

API penetration testing finds authorization flaws, shadow APIs, and business-logic bugs to secure cloud services, reduce breach risk, and support compliance.

Zero Trust orchestration across AWS, Azure, and multi-cloud environments: identity-driven policies, micro-segmentation, scalable access controls, and implementation trade-offs.

Compare VPS vs shared hosting for privacy-focused websites. Learn which option offers better control, data protection, and flexibility.

Step-by-step tactics to raise cache hit rates: set proper Cache-Control headers, normalize cache keys, use versioning and origin shielding, and monitor cache analytics.